Weakly Supervised Video Moment Localization with Contrastive Negative Sample Mining

Abstract

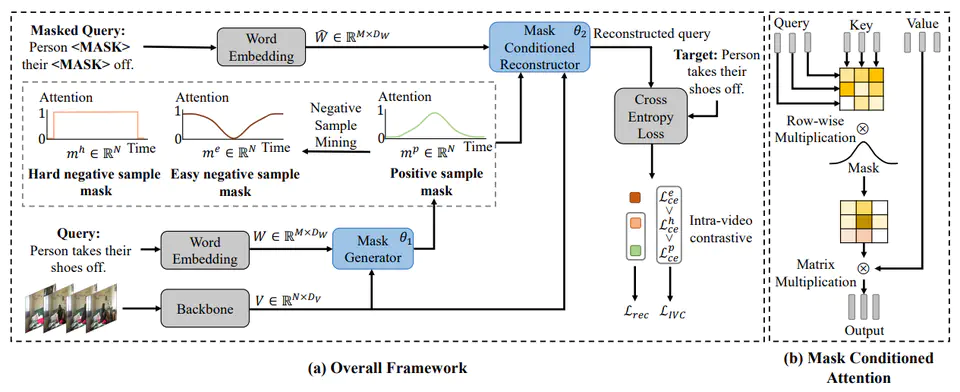

Video moment localization aims to localize the video segments most relevant to a given free-form natural language query. The weakly supervised setting, in which only video-level descriptions are available during training, has attracted increasing attention because of its lower annotation cost. Prior weakly supervised methods mainly use sliding windows to generate temporal proposals, which are independent of the video content and of low quality. They also train the model to distinguish matched video-query pairs from unmatched pairs collected from different videos, neglecting that what the model actually needs is to distinguish unaligned segments within the same video. In this work, we propose a novel weakly supervised solution that introduces Contrastive Negative sample Mining (CNM). Specifically, we use a learnable Gaussian mask to generate positive samples that highlight the video frames most related to the query, and we treat the other frames of the video and the whole video as easy and hard negative samples, respectively. We then train our network with an Intra-Video Contrastive loss that makes the positive and negative samples more discriminative. Our method has two advantages: (1) proposal generation with a learnable Gaussian mask is more efficient and yields higher-quality positive samples; (2) the more difficult intra-video negative samples enable our model to distinguish highly confusing scenes. Experiments on two datasets show the effectiveness of our method. Code can be found at this https url.
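To make the sample construction described above more concrete, here is a minimal, framework-free Python sketch. It is not the authors' implementation. Several details are assumptions made only for illustration: the mask width-to-sigma mapping (`sigma = width / 2`), the complementary mask `1 - m` used for the easy negative, the cosine-based `sample_loss` standing in for whatever matching score the real model uses, and the hinge form with hypothetical margins `margin_hard` < `margin_easy`.

```python
import math
from typing import List, Sequence

Vector = List[float]


def gaussian_mask(num_frames: int, center: float, width: float) -> List[float]:
    """Soft temporal mask peaking at `center`; center and width are normalized to [0, 1].

    In a trained model, center and width would be predicted from the video and
    query; here they are plain inputs. sigma = width / 2 is a hypothetical choice.
    """
    sigma = max(width / 2.0, 1e-6)  # avoid division by zero for a degenerate width
    mask = []
    for i in range(num_frames):
        t = (i + 0.5) / num_frames  # normalized timestamp of frame i
        mask.append(math.exp(-((t - center) ** 2) / (2.0 * sigma ** 2)))
    return mask


def masked_pool(frames: Sequence[Vector], weights: Sequence[float]) -> Vector:
    """Weighted average of per-frame features under a soft mask."""
    total = max(sum(weights), 1e-8)
    dim = len(frames[0])
    return [sum(w * f[d] for w, f in zip(weights, frames)) / total for d in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity with a small epsilon for numerical safety."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + 1e-8)


def sample_loss(frames: Sequence[Vector], weights: Sequence[float], query: Vector) -> float:
    """Hypothetical stand-in for the model's per-sample loss (lower = better match)."""
    return 1.0 - cosine(masked_pool(frames, weights), query)


def intra_video_contrastive_loss(frames: Sequence[Vector], query: Vector,
                                 center: float, width: float,
                                 margin_hard: float = 0.1,
                                 margin_easy: float = 0.2) -> float:
    """Hinge-style ranking of one positive against two intra-video negatives.

    The margin values and their ordering (hard < easy) are assumptions.
    """
    pos = gaussian_mask(len(frames), center, width)  # positive: frames under the mask
    easy = [1.0 - w for w in pos]                     # easy negative: the remaining frames
    hard = [1.0] * len(frames)                        # hard negative: the whole video
    l_pos = sample_loss(frames, pos, query)
    l_easy = sample_loss(frames, easy, query)
    l_hard = sample_loss(frames, hard, query)
    # The positive should have a lower loss than each negative by at least its margin.
    return (max(0.0, l_pos - l_hard + margin_hard)
            + max(0.0, l_pos - l_easy + margin_easy))


# Toy check: 10 frames with 2-D features; only frames 4 and 5 match the query.
query = [1.0, 0.0]
frames = [[1.0, 0.0] if i in (4, 5) else [0.0, 1.0] for i in range(10)]
good = intra_video_contrastive_loss(frames, query, center=0.5, width=0.2)
bad = intra_video_contrastive_loss(frames, query, center=0.9, width=0.2)
print(f"aligned mask: {good:.3f}, misplaced mask: {bad:.3f}")  # aligned mask should be lower
```

Because a single (center, width) pair defines the proposal, the positive and both negatives in this sketch are derived from the same video in one pass, with no sliding-window enumeration. The whole-video negative still contains the query-relevant frames, which is why it is the harder of the two negatives and is given the smaller (assumed) margin here.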

Citation

@inproceedings{CNM_2022_AAAI,
  title     = {Weakly Supervised Video Moment Localization with Contrastive Negative Sample Mining},
  author    = {Zheng, Minghang and Huang, Yanjie and Chen, Qingchao and Liu, Yang},
  booktitle = {Proceedings of the AAAI Conference on Artificial Intelligence},
  year      = {2022}
}

Acknowledgement

This work is supported by Zhejiang Lab (No. 2022NB0AB05), the State Key Laboratory of Media Convergence Production Technology and Systems, and the National Engineering Laboratory for Big Data Analysis and Applications Technology. The authors would also like to thank Jiabo Huang and Sizhe Li for their helpful suggestions.